Machine learning

SPU community shares their guidance about using ChatGPT in academics

February 24, 2023

A Conversation with ChatGPT:

The Turing test is a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. It was proposed by British mathematician and computer scientist Alan Turing in 1950.

The test works by having a human judge engage in a natural language conversation with both a machine and another human without knowing which is which. The goal of the machine is to convince the judge that it is the human, while the human’s goal is to convince the judge that they are the human. If the machine is able to successfully convince the judge that it is the human more often than not, then it can be said to have passed the Turing test and exhibited a level of artificial intelligence that is indistinguishable from human intelligence.

As an AI language model, ChatGPT can be seen as an implementation of the Turing test, in that it is designed to engage in natural language conversations with humans in a way that is indistinguishable from a human. While ChatGPT does not attempt to convince a human judge that it is a human, its goal is to provide responses that are as natural, coherent and relevant as possible, such that users may feel like they are conversing with a real person.

So, say that we were to perform a Turing test right now. This article, up until this point, has been completely written with ChatGPT.

Language is a human tool people use to communicate with each other. In online formats, books and print, words conduct information and ideas. Behind the words, people tend to consider who is writing them. Authorship is important. Yet now, people have to wonder if the author is anybody at all.

ChatGPT can essentially write essays if asked. Seattle Pacific University, just as much as any other educational institution, values academic integrity. So, it leaves all educators wondering, is this the end of the college essay?

Not necessarily, says assistant professor of English, Traynor Hansen.

“Writing as a process of discovery and knowledge creation I think is important. When it comes to ChatGPT, ChatGPT is really good at putting together clear sentences, and it’s good at mining existing knowledge that’s readily available, but it’s not good at careful and nuanced thinking and at prompting discovery,” Hansen said.

At most, ChatGPT fashions essays to the ability of a sophomore in high school. Hansen teaches many of the Writing 1000 and 1100 classes at SPU, teaching students the expectations for college-level writing across many disciplines. He is also the director of campus writing, and in the wake of recent controversies surrounding AI, supports faculty in how they assign, teach and assess writing. All to say, the goal is to write better.

“But what do you mean by ‘write better?’ Do you mean more polished sentences? Or do you mean more interesting thinking? I think there’s potential in both of those places, but the paths are pointing in different directions,” Hansen said. “For me, someone who thinks about writing pedagogy, I emphasize and talk about writing as a process. What ChatGPT could do is to take a crappy draft and make it seem polished, but it will still be a crappy draft even if it’s polished.”

A technological utopia places humanity at the center of it all. In a dystopia, our humanness is lost. Writing exists as a multifarious means of information and craft. It is both objective and subjective at the same time.

In the 18th century, between 1768 and 1774, Pierre Jaquet-Droz built three doll automata: The Musician, The Draughtsman and The Writer. Of them all, The Writer was the most complicated and was able to write custom text up to 40 letters long. He would dip a goose feather into ink, shake his wrist to prevent it from splattering and trail his eyes along the text being written.

The word “automaton” is the Latinization of the Greek word αὐτόματον, “acting of one’s own will.” Automatons and animatronics have no will. They fail the Turing test. Yet, marveling at Jaquet-Droz’s inventions, their toy faces and careful articulations, we wonder if our tools will replace more than the tasks.

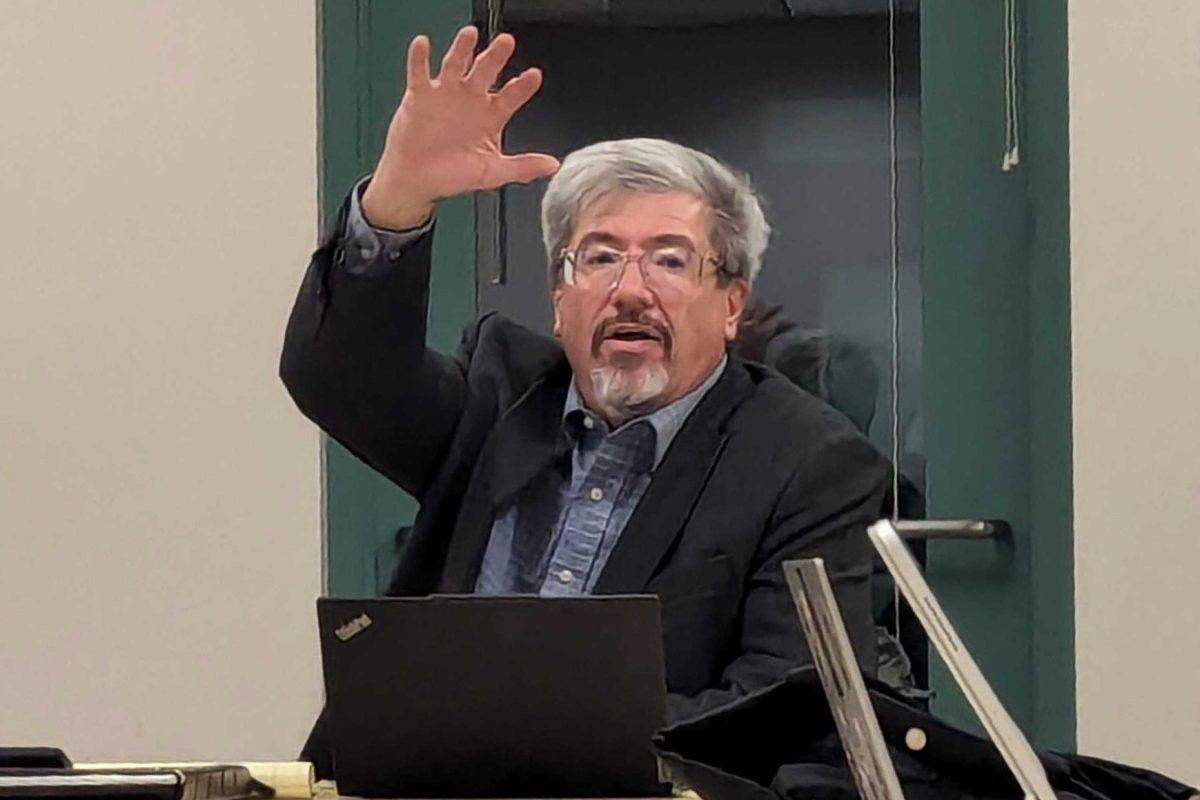

Carlos Arias is an assistant professor of computer science and chair of the department. As someone who is deeply invested in the world of programming, he assures students that human ingenuity is irreplaceable.

“The question is going to be, what is the value you can bring into this profession? Is it something that can be automated? It’s going to be automated,” Arias said. “What is expected in the world of software development is that artificial intelligence will take over the menial duties, the stuff that is easy and repetitive. Artificial intelligence is going to take over that, but we are still better at being creative.”

Creativity is the basis for all things new and valuable. Despite the novelty of AI, it can only understand how things are, not how they could be.

“What am I bringing? Am I bringing emotion? AI cannot bring emotion. It is just a computer. And this becomes more complicated when you ask, can [something] bring emotion if it was created by an emotionless entity? Where does emotion come from?” Arias said.

ChatGPT was trained on 570 gigabytes of text-based data from the internet, such as books, websites, scholarly publications and articles. As a model of language, ChatGPT seeks to create human-like text.

Language has rules. Structurally and grammatically, subjects precede verbs in English. If we are talking about a car, for example, we might say “the car drives,” assuming we know about cars.

But what if the car kills? Under what circumstances would we think that? Why would we think that? The car drives because we know cars drive, obviously. Yet we also know that cars can honk and brake too. And cars can also kill people, unfortunately. So, if we only knew that people die in serious accidents and nothing else, we would say that the car kills. AI can only ever know what it is trained on.
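To make the car example concrete, here is a minimal sketch in Python of a toy next-word model. It is not how ChatGPT works internally; it only counts which word follows which in the sentences it is given. The function names `train` and `most_likely_next` and the training sentences are invented for this illustration.

```python
from collections import Counter, defaultdict

def train(sentences):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical training sets, made up for this example.
everyday = ["the car drives", "the car drives", "the car honks", "the car kills"]
accidents_only = ["the car kills", "the car kills"]

print(most_likely_next(train(everyday), "car"))          # drives
print(most_likely_next(train(accidents_only), "car"))    # kills
print(most_likely_next(train(accidents_only), "truck"))  # None
```

Trained on everyday sentences, the toy model says the car "drives." Trained only on accident reports, it says the car "kills," and it has nothing to say about a word it has never seen.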

Garrett Crites is a third-year computer science major and speaks on the epistemological concern of AI’s inability to tell the difference between data and knowledge.

“Any kind of model is inherently gonna have the bias of the people who created it or the sources that it’s following to gather its information,” Crites said. “For example, if you have a language model that is trained only on sources that include terrible stereotypes of marginalized individuals or fabricated evidence for an event, it’s not going to differentiate between that or what we would consider a credible source.”

As scholars, students and faculty discern information and create knowledge. Many of our ideas are generated with tools of assistance such as spellcheck, thesauruses, citation machines and the internet. The tools are useful, however, only when they are used responsibly.

“When it comes to our general idea of what it means to be a student, I think it really comes into question how we handle our classrooms pedagogically. If it’s all about memorizing facts and regurgitating them, ChatGPT is going to mess with that,” Crites said.

If used wrongly, students will see ChatGPT as an opportunity to try less. Educators are thus called to become creative in their curriculums.

Prompts such as “Explain osmosis” or “Summarize the American Revolution” are easily undermined by ChatGPT. But a question such as “Artistically represent osmosis” or “Explain how the American Revolution’s effects shape your identity in America” cannot be completed with ChatGPT, only assisted. (Unless it is asked to write a poem about osmosis.)

“Be curious but skeptical. I don’t think ChatGPT and whatever comes next is anything to fear, and I don’t think it’s something that we can block. The best path is for us to be wise and learn how to use it. How we choose to include it in our classes or not include it in our classes,” Hansen said. “I hope that it would be a common practice for papers submitted for a class or academic contexts where one’s own intellectual property is being put forward, if AI has been used, to say, ‘portions of an early draft of this paper were produced with ChatGPT.’”

What came out of Pandora’s box cannot be put back in. Some students will fabricate their assignments and pass their classes. Many jobs will be replaced by artificial intelligence. Fear is a gut reaction to uncertainty, yet there is much promise in change.

The future of AI holds potential. Let us just hope it never passes the Turing test.

“Take responsibility. Yes, take advantage of the tool. But take responsibility. Your learning is yours,” Arias said.

Portions of this article were produced by ChatGPT. To see how ChatGPT works, check out this article that the program wrote about itself.
