Students at Seattle Pacific University and colleges everywhere increasingly face the possibility of replacing long hours spent sweating over essays and assignments with AI-generated writing: writing that takes seconds to generate, copy, paste and submit.

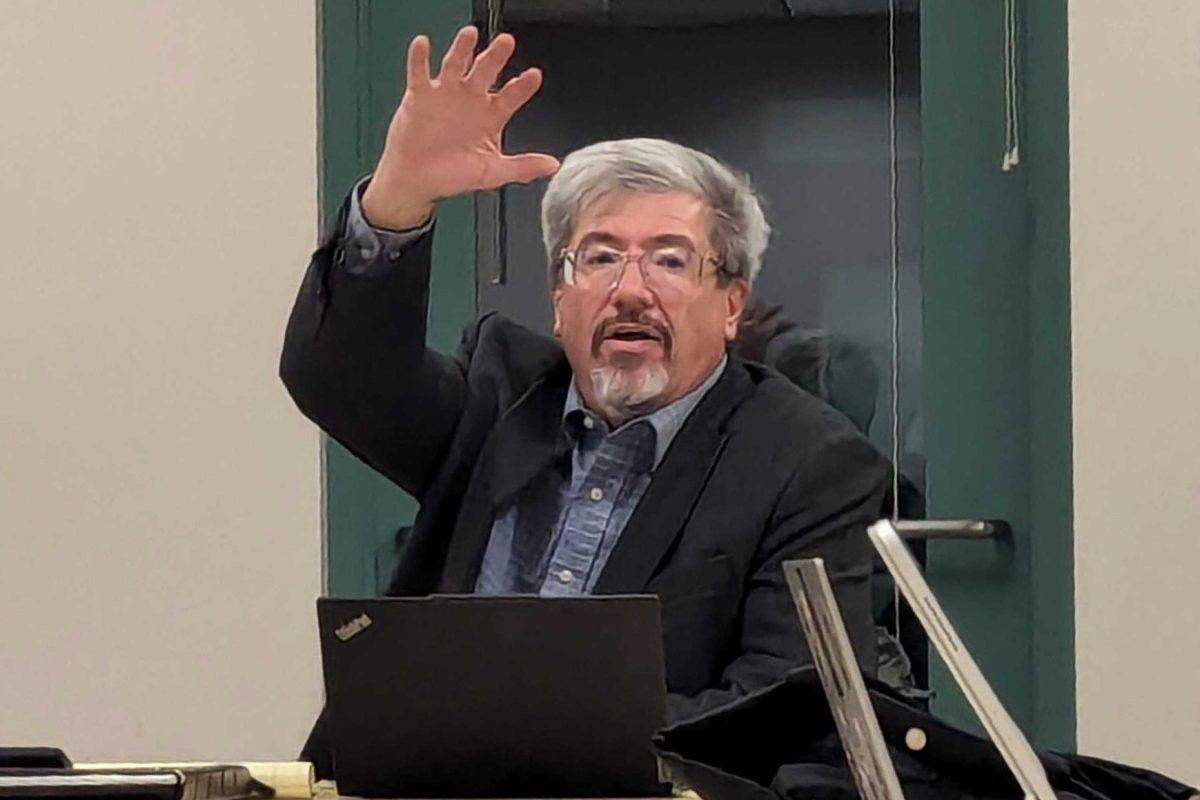

ChatGPT, the artificial intelligence language model developed by OpenAI to process and generate text, has a rapidly growing presence on campus. Faculty and students alike should be wary, says Traynor Hansen, SPU’s director of campus writing.

“The knowledge that it is based on is not representative of human knowledge. It’s representative of certain areas that have access to language,” Hansen said.

The software was trained on large amounts of publicly available text, including material from platforms like Wikipedia and Reddit, and it cannot consult specific books, websites or databases when it answers. Users of and contributors to sites like these are predominantly white, male and between the ages of 18 and 29, making ChatGPT, proportionally speaking, a white man in his mid-20s.

“The knowledge that ChatGPT has is skewed and biased because where it’s drawing from is on a disproportionate level to the actual sort of variety of human thinking and language use and what’s actually out there,” Hansen said.

Because it generates text from patterns in its training data rather than looking up sources, the program cannot provide specific, verifiable quotes. Quotes in ChatGPT essays are either widely circulated lines repeated without verification or flat-out fabrications.

After generating an essay about “Frankenstein” chock-full of quotes, ChatGPT responded to a question about its sources:

> I did not access specific quotes from “Frankenstein” or any other text. The quotes provided in my previous response were not taken directly from the novel but were instead generated based on my understanding of the themes and content of “Frankenstein.” They were crafted to illustrate the thematic discussion about identity in the context of Mary Shelley’s work.

“It doesn’t know anything,” Hansen said. “It’s just a prediction of words.”

ChatGPT will even supply lengthy lists of quotes, generated from publicly available information about a text and reflecting themes that have already been widely analyzed. They are all fake. The essays and theses it produces often rely on beginner-level writing and structure.

“We’ve moved on from the five-paragraph essay,” Hansen said. “We don’t want students to produce that kind of writing; it doesn’t represent the kind of thinking we try to teach in our classes.”

Hansen emphasizes writing as a process rather than a product, valuing the work students put into their own education. In his classroom, the experience of wrestling with ideas and ultimately understanding them is invaluable.

“What ChatGPT does is it gives you a really sexy product without any work, but it’s a shortcut around the process,” Hansen said.

He urges SPU students not to use the software, both to avoid the consequences of plagiarism and to grow through their own education.

Michael Paulus, dean of the library and provost for educational technology, has led the conversation around AI at SPU since before ChatGPT’s release and recommends valuing AI for the tool it can be while remembering its limitations.

“There is a temptation to outsource our agency and responsibility, and then feel like we don’t have any control or influence on what’s happening,” Paulus said. “We need to confront and address those things so that we understand the data that’s used, and figure out how we intervene and actually make these things work for us.”

ChatGPT and AI more broadly can also serve as tools on a university campus. Junior Brian Caldera, a communication studies major, uses ChatGPT in class, not to cheat but to complete an essay assignment set by his professor.

“The assignment involves us training the AI to understand a little more about ourselves, and then proceeding to insert a series of 20 questions that we have personally answered,” Caldera said. “After that, we ask it to create a personal essay out of the questions we have inserted.”

Caldera sees the value of ChatGPT but avoids using it extensively.

“It is expanding in knowledge and can truly help you in some areas,” Caldera said. “Although, the negatives include the information it gives you sometimes could be totally false, and it can also put you at high risk of plagiarism! I think that it should be embraced when you can use it for correct information, and avoided otherwise.”

In the future, SPU could benefit from AI templates for academic advising, chatbots for financial services and other university services, and, yes, writing tools.

“Grammarly is similar to ChatGPT because it is trained on these huge uses of language,” Hansen said, noting that he uses the AI grammar-checking service in his own work. “I’m using it critically, thinking about the suggestions that are being made and going through and accepting or rejecting them based on my own knowledge of language and knowledge of what I’m trying to say.”

Paulus, co-author of “AI, Faith, and the Future,” sees feelings about AI as falling on a spectrum, from negative and fearful to hopeful and positive.

“We have a lot to learn how to use this responsibly, but it’s actually quite exciting some of the things you can do with it,” he said. “How does AI change what we teach, how we teach, and how we work?”

Faculty can avoid conflict by communicating clearly with their students about ChatGPT from the start. Whether the software is used as a tool and an experiment or banned from classwork entirely, educators should make their decisions deliberately rather than react out of fear, Hansen argues.

“Pay attention to yourself and where your fears are coming from. Not to make them go away, but to not be reactive in fear,” Hansen said.

Hansen and other faculty members urge students to commit themselves to their own writing. By the time students finish revising ChatGPT essays, verifying that the quotes actually appear in the text, working out which demographic is behind the information, and familiarizing themselves with the course material to avoid suspicion, they could have written the essay themselves twice over, and grown wiser for it.